Chapter 2 Frog Families & HMMs

Previously, we wanted to study the weather. Let’s up the stakes and now look at how the weather can determine whether or not a cute, infinitely reproducing, frog-family survives the month.

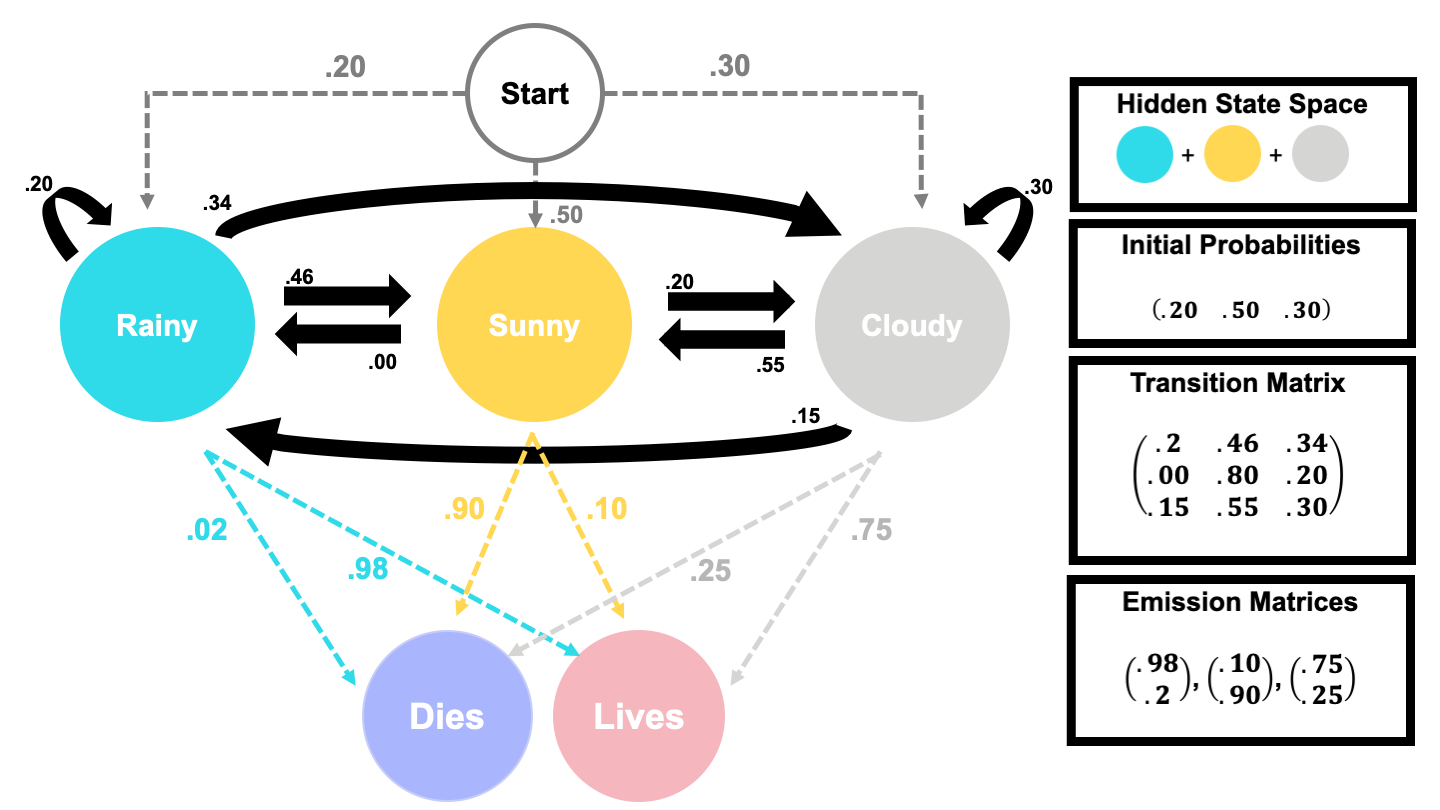

If you were a frog, you wouldn’t be able to check the weather before leaving your tree hide– you wouldn’t even be able to read!– but you do know that when a family member leaves for their daily hop, their chance of living depends on the weather. If the weather is

- Sunny: they would have a 10% chance of living (and eating a nice bug) with a 90% chance of dying.

- Cloudy: they would have a 75% chance of living (and eating a nice bug) with a 25% chance of dying.

- Rainy: they would have a 98% chance of living (and eating a nice bug) with a 2% chance of dying.

Since we, as non-frog statisticians, know that the weather is a Markov Chain, we can say the survival of this frog-family is actually a Hidden Markov Model. In the following sections, we will establish what that implies theoretically for us & our frog family.

2.2 Algorithms

Let’s say the frog family has been really lucky, and all the frogs who left the tree hide in the past week came back! One very curious frog named Betsy wonders what the probability of all her family surviving is, given the weather.

We can answer Betsy’s questions using likelihood calculations or the Forward-Backward Algorithm.

Likelihood

Likelihood or Posterior Probability are commonly defined as the probability of the data, given a true underlying parameter5.

Let be the hidden states of the weather in the past week, and let be our observations on whether or not the frogs came back alive. This implies

In this case, the likelihood would be the probability of our observed , given some true underlying set of . This is computed below.

NOTE: The capital pi () means to multiply a series indexed by .

If we knew the states of the past week were Sunny, Cloudy, Rainy, Rainy, Rainy, Rainy, Rainy and everyone came back alive. We’d compute the likelihood as

Bad News: We can’t truly know the states of the ! Bummer.

Because of this, we would need to add together all likelihoods for all possible states for each event, weighted by their probability6 . More succinctly, we intend to find the likelihood of all observations , given the probability of all states . We can do this with the rules of joint probability we covered in the previous section.

Now, we sum up all the observations given.

So, for our 7 consecutive live frogs, this would be

That is extremely difficult to do when we the number of our states () are numerous or we have some arbitrarily large number observations, , since we’d be analyzing different possible sequences. However, we can predict the hidden states of HMMs like this one, using the Forward-Backward Algorithm.

The Forward-Backward Algorithm

The Forward-Backward Algorithm is a dynamic programming algorithm used to infer the probability of seeing observations in a Hidden Markov Model. It contains two sub-algorithms, the Forward Algorithm & the Backward Algorithm, which respectively compute the probability of the data from the beginning and the end of observations until they collide and result in a total probability of the data7. The process may seem extra, but as you’ve seen in the last section’s toy computation, we have to be extra out of necessity.

NOTE: The following sections heavily drawn from the following sources 8 9 10 11

Forward

In the first section of the Forward-Backward Algorithm, we compute the forward probabilities. Forward probabilities are informally the joint probability of all observations so far and the probability of being in some given state space.

Formally, a forward probability is defined as > NOTE: That is, we compute the probability of the first observations () leading us to our current observation while also being in state , .

To compute the forward probabilities, we must

- Sum over all previous forward probabilities that would lead us to be in

- Multiply by the transition probabilities describing the change from the previous state to the current state .

- Multiply again by the emission probabilities of our current observation , given the state . That is .

Formally, this is written as

Note: Remember that is the state space!

In practice, we typically use recursion to compute the probability of our observations. Like so

1.) Base Case: We use the initial probability of our system’s states.

2.) Recursion: We use the previous case & transition & emission probabilities to compute the probability of being in the newest state .

2.) End: We can then define the probability of the observations as the recursive sum of all forward probabilities.

Backward

In the second section of the Forward-Backward Algorithm, we compute the backward probabilities. Backward probabilities are informally the joint probability of successive observations and the probability of being in some arbitrary state , given that we start an observation .

Formally, a backward probability is defined as

To compute the backward probabilities, we must - Sum the successive values after until the end of the k-observations at . - Multiply by the transition probabilities describing the change from the given current state to the next state . - Multiply again by the emission probabilities of the success observation . That is .

Formally, this is written as

Note: Remember that is the state space!

In practice, we typically use recursion to compute the probability of our observations. Like so

1.) Base Case: We use the initial probability of our system’s states. The value is 1 since we’re working backward!

2.) Recursion: We use the previous transition & emission probabilities of being in the previous state from the state to weigh the sum of all success values.

2.) End: We can then define the backward probability of the observations as the recursive sum of values after , beginning with the end value.

In the end, we’ve done the same thing! But now, we can use the probabilities of our observations to study the distribution of the hidden states.

Collision

We can use Bayes’ Rule, joint probability, and our previous work to compute the probability of being in a state at a given time, . This is commonly called a smoother analysis. Like before, we will use the notation of our frog example to describe the states () and observations ().

By Bayes’ Rule, the probability of being in state, , given the observations, is

However, we can make this simpler by acknowledging that is proportional (e.g., division by a constant value) to the joint probability of and . So,

We can simplify this even further by splitting our observations at the observation, like

Then,

We recognize these as the backward probability times the forward. We include our constant value .

Note: We’ve multiplied by the constant value of because the marginal distribution of the would be the sum of the probabilities of the observations for each possible state, by the Law of Total Probability.

Thus, the probability of being in a given state , at a particular time, , given the data is

We did it! Intuitively, it might not make sense why we can compute the probability of being at a state by multiplying the backward & forward probabilities, then dividing by the possible states.

The idea is that the recursive functions “scan” through the observations, determining some probability of the next or previous outcome, until they collide and essentially collapse onto a probability of that observation. They do this for each possible observation. Then, by dividing the total probability of the observations, we can get the probability of the -state.

2.3 Conclusion

We did it! We’ve learned how to compute the probability of the observations & the probability of a particular state, given a time, using the Forward-Backward Algorithm. There are, of course, other problems that Hidden Markov Models can solve, like finding the most probable sequence of all states or how to maximize the functions forward or backward functions.

However, the Forward-Backward Algorithm has so many meaningful applications throughout the natural & social sciences alone. We’ll look at a couple in the next chapter.

If you have any lingering questions, I’ve linked some great YouTube videos that may be helpful below.