Chapter 1 Mathematical Intuition

Markov Chains are cool! Hidden Markov Models are also cool, but require more preparation! In this section, we’ll go through conditional probabilities & set up the basis to study Hidden Markov Models by getting comfortable with chains first.

1.1 Conditional Probability & Bayes Rule

Basic Concepts

Probability, as a field, formalizes how we predict events with some equally beautiful & ugly notation, intuitive concepts, and complex mathematical principles. But the premise is simple: by ascribing a numeric value to the outcomes of an event, we can abstract the real world and study it with math.

The process of ascribing numeric values to the outcomes of an event is called mapping. By mapping every possible outcome of an event to a numeric value, we create a random variable.

NOTE: This can be confusing! The “random” part of the word doesn’t mean all outcomes have an equal chance of happening; really, it means that within an event, there are multiple possible outcomes.

Example: Weather & Temperature

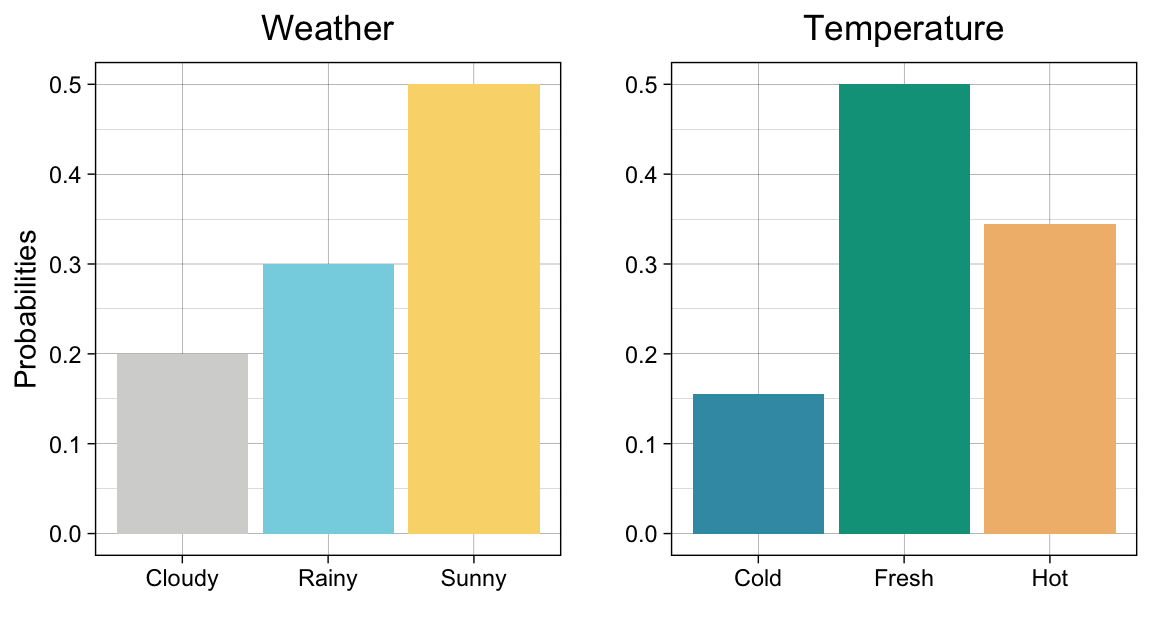

Let’s say that the weather is the event, $W$, whose only outcomes are sunny ($w_s$), rainy ($w_r$), or cloudy ($w_c$). By mapping numeric values (e.g., probabilities) to the outcomes, we can turn $W$ into a random variable. Below, we list all mappings in the probability mass function, $P(W)$.

NOTE: The probabilities in any probability mass function must sum to 1.

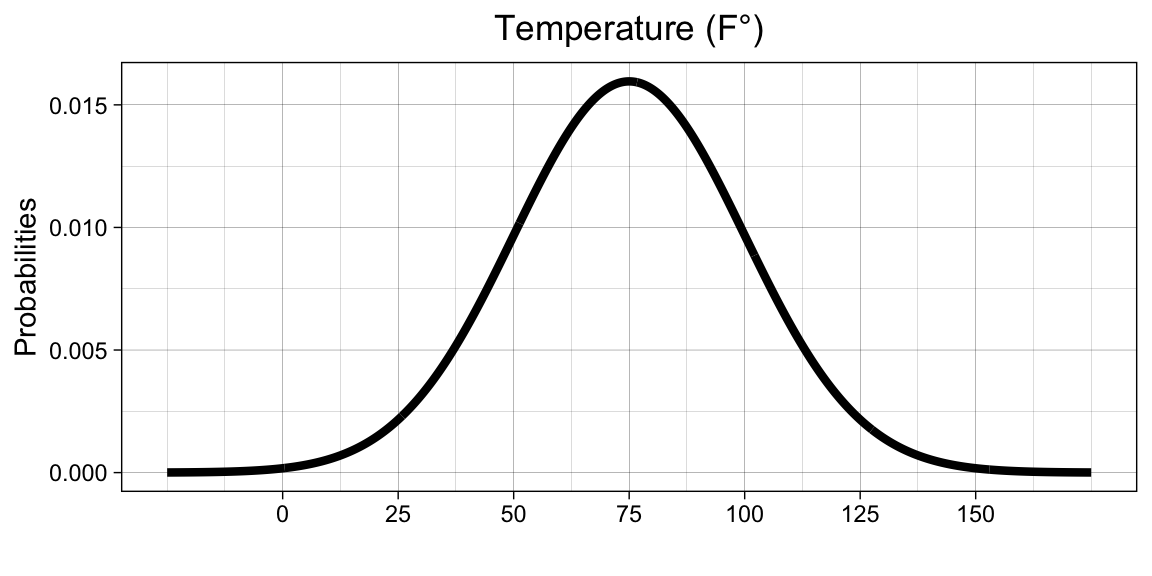
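The sum-to-1 requirement is easy to check in code. Here is a minimal Python sketch; the probabilities and the helper name `is_valid_pmf` are made up for illustration and are not the values from the figure.

```python
import math

# Hypothetical probabilities for illustration; NOT the values from the text.
weather_pmf = {"sunny": 0.5, "rainy": 0.2, "cloudy": 0.3}


def is_valid_pmf(pmf, tol=1e-9):
    """Return True if every probability lies in [0, 1] and they sum to 1."""
    in_range = all(0.0 <= p <= 1.0 for p in pmf.values())
    sums_to_one = math.isclose(sum(pmf.values()), 1.0, abs_tol=tol)
    return in_range and sums_to_one


print(is_valid_pmf(weather_pmf))
```

The tolerance is there because floating-point sums are rarely exactly 1, even when the probabilities are right on paper.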

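Now, let’s say that the daily temperature (°F) is the event $T$. Normally, we could say that $T$ follows a normal distribution with mean $\mu$ and standard deviation $\sigma$, since it’s a continuous variable. So, $T$ and its density would look something like this:

$$T \sim \mathcal{N}(\mu, \sigma^2), \qquad f(t) = \frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{(t-\mu)^2}{2\sigma^2}}$$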

Instead, let’s say that $T$ is a discrete random variable whose only potential outcomes are cold ($t_c$), fresh ($t_f$), or hot ($t_h$). Then, $T$ has its own probability mass function, $P(T)$.

Below are two graphs summarizing what we know so far about the weather, $W$, and the temperature, $T$.

But what happens if the weather depends on the temperature?

Conditional Probabilities

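Let’s study two arbitrary events, $A$ and $B$, & learn some definitions about probability.

Conditional Probability:

The conditional probabilities of $A$ given $B$, and of $B$ given $A$, are written below.

$$P(A \mid B) = \frac{P(A \cap B)}{P(B)}, \qquad P(B \mid A) = \frac{P(A \cap B)}{P(A)}$$

Independence: We say that the two events, $A$ & $B$, are independent if the conditional probabilities provide us no new information about either event. So,

$$P(A \mid B) = P(A), \qquad P(B \mid A) = P(B)$$

Joint Probability: The probability of two events, $A$ & $B$, happening at the same time is called a joint probability and is typically denoted by $P(A \cap B)$. It is calculated below.

$$P(A \cap B) = P(A \mid B)\,P(B) = P(B \mid A)\,P(A)$$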

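It’s generally true that the weather on a particular day depends on the temperature. This implies that the weather, $W$, and the temperature, $T$, are dependent events. So, the conditional probability of the weather given the temperature, $P(W \mid T)$, generally differs from $P(W)$.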
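To make these definitions concrete, here is a small Python sketch that starts from a joint probability table for weather and temperature and derives the marginal and conditional probabilities from it. Every number in the table is hypothetical, and the function names (`p_weather`, `p_temp`, `p_weather_given_temp`, `are_independent`) are just illustrative choices.

```python
# Hypothetical joint probabilities P(W = w, T = t); made up for illustration.
joint = {
    ("sunny", "hot"): 0.30, ("sunny", "fresh"): 0.15, ("sunny", "cold"): 0.05,
    ("rainy", "hot"): 0.02, ("rainy", "fresh"): 0.08, ("rainy", "cold"): 0.10,
    ("cloudy", "hot"): 0.08, ("cloudy", "fresh"): 0.12, ("cloudy", "cold"): 0.10,
}


def p_weather(w):
    """Marginal P(W = w): add the joint probabilities over every temperature."""
    return sum(p for (w_i, _), p in joint.items() if w_i == w)


def p_temp(t):
    """Marginal P(T = t): add the joint probabilities over every weather outcome."""
    return sum(p for (_, t_i), p in joint.items() if t_i == t)


def p_weather_given_temp(w, t):
    """Conditional P(W = w | T = t) = P(W = w, T = t) / P(T = t)."""
    return joint[(w, t)] / p_temp(t)


def are_independent(tol=1e-9):
    """True only if P(W = w | T = t) equals P(W = w) for every pair (w, t)."""
    return all(
        abs(p_weather_given_temp(w, t) - p_weather(w)) <= tol
        for (w, t) in joint
    )


print(p_weather("sunny"))
print(p_weather_given_temp("sunny", "hot"))
print(are_independent())
```

In this made-up table, knowing that the day is hot changes the probability of a sunny day, so the two events are not independent.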

Bayes’ Rule & LOTP

Conceived by Reverend Thomas Bayes in the 18th century, posthumously published by his friend Richard Price, and then formalized into an equation by Pierre-Simon Laplace, Bayes’ Rule is a cornerstone equation in modern statistics & probability.¹ I’ve written Bayes’ Rule below.²

$$P(A \mid B) = \frac{P(A \cap B)}{P(B)} = \frac{P(B \mid A)\,P(A)}{P(B)}$$

NOTE: Above you’ll notice that we’re looking at the probability of $A$ & $B$, divided by the probability of $B$. We are dividing by $P(B)$ to normalize & isolate the probability of $A$, under the condition that we observe $B$.

Quick Check: If $A$ & $B$ are independent, how would you further simplify the numerator of Bayes’ Rule?

The denominator of Bayes’ Rule, $P(B)$, is called the marginal probability of $B$.

The marginal probability can either be

- given

- computed with the law of total probability (LOTP).

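LOTP states that

$$P(B) = \sum_{i} P(B \mid A_i)\,P(A_i)$$

where the $A_i$ are the mutually exclusive outcomes (subcategories) of $A$ that together cover every possibility.

Note: Looks scary! Really it’s like calculating the probability of $B$ in each subcategory of $A$, multiplying by the probability of that sub-$A$, then adding it all together.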
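Here is a short Python sketch of LOTP and Bayes’ Rule working together. It assumes the temperature outcomes play the role of the subcategories $A_i$ and “it rains” plays the role of $B$. All of the numbers and the function names `marginal` and `bayes` are hypothetical.

```python
# Hypothetical numbers for illustration; none of them come from the text.
# Prior P(A_i): probability of each temperature outcome.
p_temp = {"cold": 0.25, "fresh": 0.35, "hot": 0.40}
# Likelihood P(B | A_i): probability of rain given each temperature outcome.
p_rain_given_temp = {"cold": 0.40, "fresh": 0.20, "hot": 0.05}


def marginal(prior, likelihood):
    """LOTP: P(B) = sum over i of P(B | A_i) * P(A_i)."""
    return sum(likelihood[a] * prior[a] for a in prior)


def bayes(prior, likelihood):
    """Bayes' Rule: P(A_i | B) = P(B | A_i) * P(A_i) / P(B), for every A_i."""
    evidence = marginal(prior, likelihood)
    return {a: likelihood[a] * prior[a] / evidence for a in prior}


p_rain = marginal(p_temp, p_rain_given_temp)
p_temp_given_rain = bayes(p_temp, p_rain_given_temp)

print(round(p_rain, 4))
print({t: round(p, 4) for t, p in p_temp_given_rain.items()})
```

Dividing by the marginal is what makes the three posterior probabilities sum to 1 again.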

1.2 Markov Chains

Stochastic processes are events that have some element of randomness in their outcomes. The amount of ‘randomness’ & the type of events can vary depending on the context. In turn, studying the properties of stochastic processes often requires many different techniques which go well beyond the boundaries of statistics. And with a litany of applications across so many domains of knowledge, studying stochastic processes also involves many techniques from Physics, Linguistics, Sociology, Public Health, Geography & more.

Note: There is some nuance in how the words random, probabilistic, and stochastic are used, but it is murky. So, for now, let’s stick with the above definition & enjoy some interchangeability between random, stochastic, and probabilistic.

So, this section will only be a tiny snippet of the wide range of topics covered in the study of stochastic processes.

Intuition

Markov chains are a subclass of stochastic processes that describe partially random events occurring in succession. We will formalize this definition soon, but for now, let’s talk about the weather.

Example: Weather

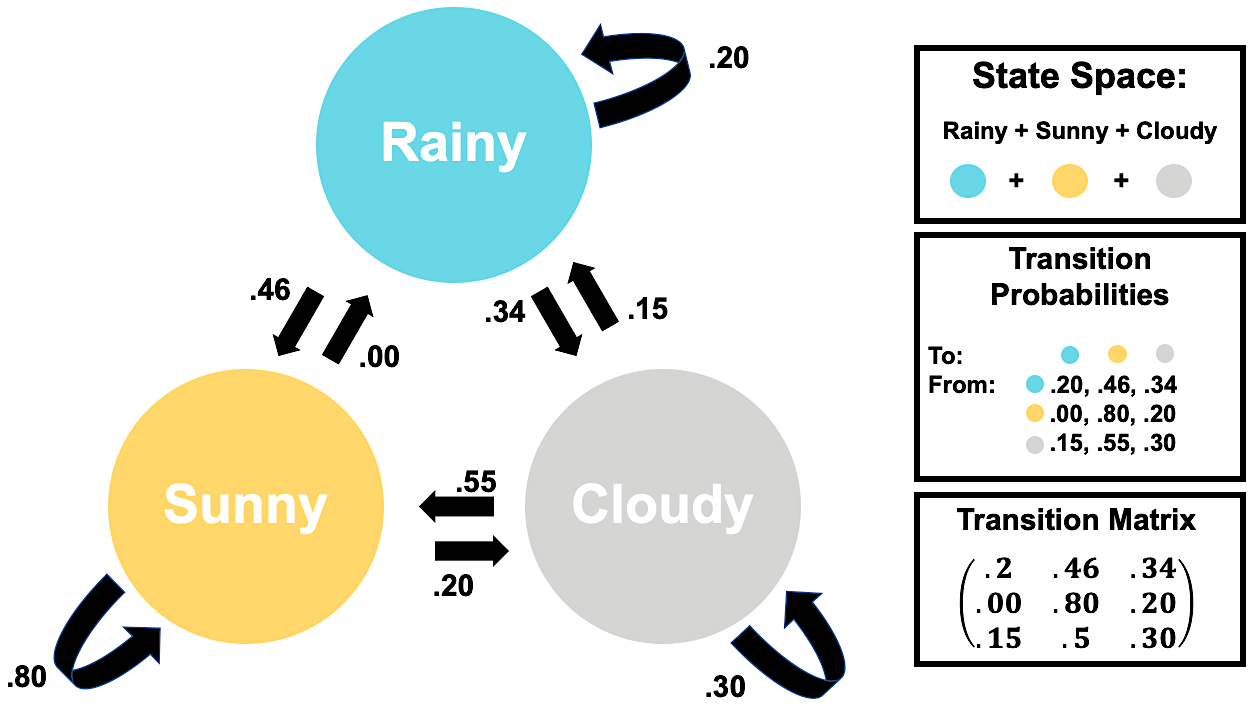

Let’s ignore the fact that temperature determines weather. Instead, let’s assume that we can compute the probability of tomorrow’s weather by only looking at today’s. This results in three important ideas:

- General weather patterns before today are irrelevant when predicting tomorrow’s weather. This is an example of a Markov process, more specifically a Markov Chain.

- The outcomes of the weather are rainy, sunny, or cloudy. These are examples of states.

- The probability of tomorrow’s weather depends on whether it is rainy, sunny, or cloudy today. This means there are probabilities associated with tomorrow’s events that are strictly defined by today’s. These are examples of transition probabilities.

Let’s be rigorous now!

Mathematical Definitions

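Let $X_1, X_2, \dots, X_n$ be a collection of successively indexed events.

The discrete $X_1, X_2, \dots, X_n$ are a Markov Chain if

- They exhibit the Markov Property.

- That is, the probability of some new or predicted event, $X_{n+1}$, is

$$P(X_{n+1} = x_{n+1} \mid X_1 = x_1, \dots, X_n = x_n) = P(X_{n+1} = x_{n+1} \mid X_n = x_n)$$

NOTE: This is similar to the conditional probability of independent events! But instead we specify that, once $X_n$ is known, the prior events $X_1, \dots, X_{n-1}$ give no new information about the outcome of $X_{n+1}$, while $X_n$ still does.

- There is some countable set of outcomes called the State Space, $S$, which contains every possible outcome or State.

- The outcomes $x_1, \dots, x_{n+1}$ are all elements of the state space, $S$.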

NOTE: This may seem complicated, but this means we can define what are possible and impossible outcomes.

- The probability of changing states is a Transition Probability, and if we were to write out the transition probabilities leaving each state, they would sum to $1$.

- Transition Probabilities sum to 1 because, for each current state, they together define the probability mass function of the next state.

- These probabilities can be placed in a Transition Matrix where the columns indicate a next state & the rows indicate the current state.
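The definitions above can be sketched in Python as a nested dictionary, with rows as current states and columns as next states. The probabilities below are hypothetical, and `invalid_rows` and `transition_probability` are illustrative helper names, not part of any library.

```python
import math

# Hypothetical transition probabilities for illustration only.
# Outer key = current state (row), inner key = next state (column).
transition_matrix = {
    "sunny":  {"sunny": 0.7, "rainy": 0.1, "cloudy": 0.2},
    "rainy":  {"sunny": 0.3, "rainy": 0.4, "cloudy": 0.3},
    "cloudy": {"sunny": 0.4, "rainy": 0.3, "cloudy": 0.3},
}


def invalid_rows(matrix, tol=1e-9):
    """Return the current states whose row breaks one of the definitions.

    A row is invalid if it points to a state outside the state space
    or if its transition probabilities do not sum to 1.
    """
    state_space = set(matrix)
    bad = []
    for current, row in matrix.items():
        stays_in_space = set(row) <= state_space
        sums_to_one = math.isclose(sum(row.values()), 1.0, abs_tol=tol)
        if not (stays_in_space and sums_to_one):
            bad.append(current)
    return bad


def transition_probability(matrix, current, nxt):
    """Look up P(next state = nxt | current state = current)."""
    return matrix[current].get(nxt, 0.0)


print(invalid_rows(transition_matrix))
print(transition_probability(transition_matrix, "rainy", "sunny"))
```

Note that it is each row that sums to 1, not each column. Every row is the probability mass function of tomorrow’s state given today’s.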

NOTE: The above definitions were cumulatively drawn from the following sources.³ ⁴

Visualizations

Weather Graphs

Below we’ve redefined our weather example as a Markov Chain, using our new definitions, and placed it into a Weighted Directed Graph. Isn’t she pretty! Note that the weight on each arrow corresponds to the transition probability associated with that arrow.

Also, check out that Transition Matrix! It’s the first one you’ve looked at, but they can quickly get very complex.

Weather Animations

To simulate how a Markov Chain of weather behaves, let’s animate it!

In this visualization, there are 10 multicolored dots representing different arbitrary days colored by that particular day’s weather. To see how Markov Chains evolve over 15 days, we shuffle them 15 times in a row according to our transition matrix

| Rainy | Sunny | Cloudy |
|---|---|---|
| .20 | .46 | .34 |
| .00 | .80 | .20 |
| .15 | .50 | .30 |

Written text describes the proportion of weather outcomes following simulation. That is, how many rainy, sunny, or cloudy days happen after a given shuffle.
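If you want to play with the shuffling yourself, here is a minimal Python sketch of the same idea. It is not the code behind the animation. It assumes the rows of the table are in the same order as the columns (rainy, sunny, cloudy), and it picks each dot’s starting weather uniformly at random. As printed, the third row of the table sums to 0.95; `random.choices` treats each row as relative weights, so that row is implicitly rescaled here.

```python
import random
from collections import Counter

STATES = ["rainy", "sunny", "cloudy"]

# Rows copied from the table above. ASSUMPTION: row order matches column order.
# random.choices uses these as relative weights, so a row need not sum to 1.
TRANSITIONS = {
    "rainy":  [0.20, 0.46, 0.34],
    "sunny":  [0.00, 0.80, 0.20],
    "cloudy": [0.15, 0.50, 0.30],
}


def step(current, rng):
    """Draw tomorrow's weather using only today's weather (the Markov Property)."""
    return rng.choices(STATES, weights=TRANSITIONS[current], k=1)[0]


def simulate(n_dots=10, n_steps=15, seed=0):
    """Shuffle n_dots independent chains n_steps times; return the counts per step."""
    rng = random.Random(seed)
    # ASSUMPTION: starting weather is uniform; the animation may start differently.
    dots = [rng.choice(STATES) for _ in range(n_dots)]
    history = [Counter(dots)]
    for _ in range(n_steps):
        dots = [step(dot, rng) for dot in dots]
        history.append(Counter(dots))
    return history


n_dots = 10
history = simulate(n_dots=n_dots, n_steps=15)
for shuffle, counts in enumerate(history):
    proportions = {state: counts[state] / n_dots for state in STATES}
    print(shuffle, proportions)
```

With only 10 dots the proportions jump around quite a bit from shuffle to shuffle. Raising `n_dots` should smooth them out.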

Look at that! We converged on our initial probability distribution. That isn’t necessarily true for all simulations, but it’s true for this one.
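One way to see where the proportions settle, without any randomness, is to push a whole probability distribution through the transition matrix again and again. The Python sketch below does that. It assumes the table’s rows are ordered rainy, sunny, cloudy, and it rescales each row to sum to 1, since the third row as printed sums to 0.95. The names `evolve` and `long_run` are illustrative.

```python
STATES = ["rainy", "sunny", "cloudy"]

# Rows copied from the table above. ASSUMPTION: row order matches column order.
RAW = [
    [0.20, 0.46, 0.34],
    [0.00, 0.80, 0.20],
    [0.15, 0.50, 0.30],
]
# ASSUMPTION: rescale every row so it is a proper probability mass function.
P = [[p / sum(row) for p in row] for row in RAW]


def evolve(dist, matrix):
    """One step: new_dist[j] = sum over i of dist[i] * matrix[i][j]."""
    n = len(matrix)
    return [sum(dist[i] * matrix[i][j] for i in range(n)) for j in range(n)]


def long_run(dist, matrix, n_steps=100):
    """Apply the transition matrix n_steps times to a starting distribution."""
    for _ in range(n_steps):
        dist = evolve(dist, matrix)
    return dist


from_rainy = long_run([1.0, 0.0, 0.0], P)
from_sunny = long_run([0.0, 1.0, 0.0], P)

print(dict(zip(STATES, (round(p, 3) for p in from_rainy))))
print(dict(zip(STATES, (round(p, 3) for p in from_sunny))))
```

For this particular matrix, both starting points end up at the same long-run distribution. As noted above, that kind of convergence is not guaranteed for every chain.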

NOTE: This Markov Chain visualization was designed by Will Hipson, a graduate student in Psychology at Carleton University. You can find links for reproduction at his page,⁵ or check out my GitHub to see my edits.

1.3 Conclusion

We’ve covered the necessary probability concepts. In the next section, we’ll find out how we can leverage Markov Chains to predict another set of variables, even when we can’t see the outcomes of our chain. Prepare for bivariate distributions, many subscripts, and a lot of summation notation!

If you have any lingering questions, I’ve linked some great YouTube videos that may be helpful below.

https://www.bayesrulesbook.com/chapter-1.html#a-quick-history-lesson

Lay, David C. 2012. “Applications to Markov Chains.” In Linear Algebra and Its Applications, 4th ed., 253–62. Boston: Pearson College Division.

https://willhipson.netlify.app/post/markov-sim/markov_chain/